Nuclear Strategy and the Limits of Deductive Reasoning

How to calculate nuclear risks (and how not to)

Picture: U.S. Air Force / Ebensberger

How do we assess nuclear risks? The go-to method in popular discourse—and arguably among decision-makers—is to quantify risk levels by assigning a specific percentage likelihood of nuclear weapons being used.

The outgoing U.S. administration appears to have favored this approach as well. In March 2024, it was reported that the Biden Administration assessed the risk of nuclear use during the Kharkiv offensive in Fall 2022 as high as 50 percent. More recently, Secretary of State Blinken, when asked about the seriousness of Putin’s nuclear saber-rattling, emphasized how even a shift from five to 15 percent in the risk of nuclear use constitutes a significant increase.

In the following paragraphs, I will explain why such assessments are fundamentally flawed. Importantly, my critique is not directed at the percentages themselves (which I don’t agree with in any case) but at the methodology underpinning these assessments.

Assigning crude percentages to the likelihood of nuclear use fails to account for the methodological limitations inherent in discussions of nuclear strategy. Given that these numbers have been instrumentalized to justify withholding essential aid to Ukraine, this issue goes beyond academic debate.

Studying nuclear use

In academic terms, nuclear use represents what we refer to as a ‘binary’ variable. This means that the variable can only assume a value of 0 or 1, with no gradations in between. You can’t use nuclear weapons ‘a little’ or ‘50 percent’; either they are used, or they are not.

When studying the likelihood of nuclear use, the latter constitutes the ‘dependent’ variable, i.e., the phenomenon we seek to explain. Whether this dependent variable takes on the value of 0 or 1 (non-use or use) depends on a range of ‘input’ variables, sometimes also referred to as ‘explanatory’ or ‘independent’ variables.

In the Russia-Ukraine case, these input variables could include factors such as the battlefield situation, the extent of outside assistance to Ukraine, Russian regime stability, Ukrainian support for the war effort, Russia’s economic situation, and more. The idea is that as these input variables assume specific values, they can help us, using appropriate statistical tools, predict the likelihood of the dependent variable (nuclear use) taking a value of 0 or 1 (non-use or use).

This approach underpins research designs employing logistic regression models. These statistical models use a set of input variables to make (or attempt to make) statistically significant predictions about the dependent variable’s value as the values of the input variables vary.
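To make the mechanics concrete, here is a minimal sketch of how a logistic model turns input values into a predicted probability. The variable names, the intercept, and every coefficient are invented for illustration; none of them is estimated from data or taken from any study, and their signs and sizes imply nothing about the real world.

```python
import math

# Hypothetical coefficients, chosen only for illustration (not estimated from data).
INTERCEPT = -4.0
WEIGHTS = {
    "battlefield_losses": 1.5,
    "outside_assistance": 0.5,
    "regime_instability": 2.0,
}

def predicted_probability(inputs):
    """Logistic model: squash a weighted sum of the inputs into a 0-1 probability."""
    score = INTERCEPT + sum(WEIGHTS[name] * value for name, value in inputs.items())
    return 1.0 / (1.0 + math.exp(-score))

# Two made-up scenarios, each input scaled between 0 and 1.
calm = {"battlefield_losses": 0.1, "outside_assistance": 0.5, "regime_instability": 0.1}
tense = {"battlefield_losses": 0.9, "outside_assistance": 0.5, "regime_instability": 0.8}

print(round(predicted_probability(calm), 3))
print(round(predicted_probability(tense), 3))
```

In a real study the coefficients would be estimated from a dataset of past observations rather than typed in by hand, which is exactly where the number of available observations starts to matter.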

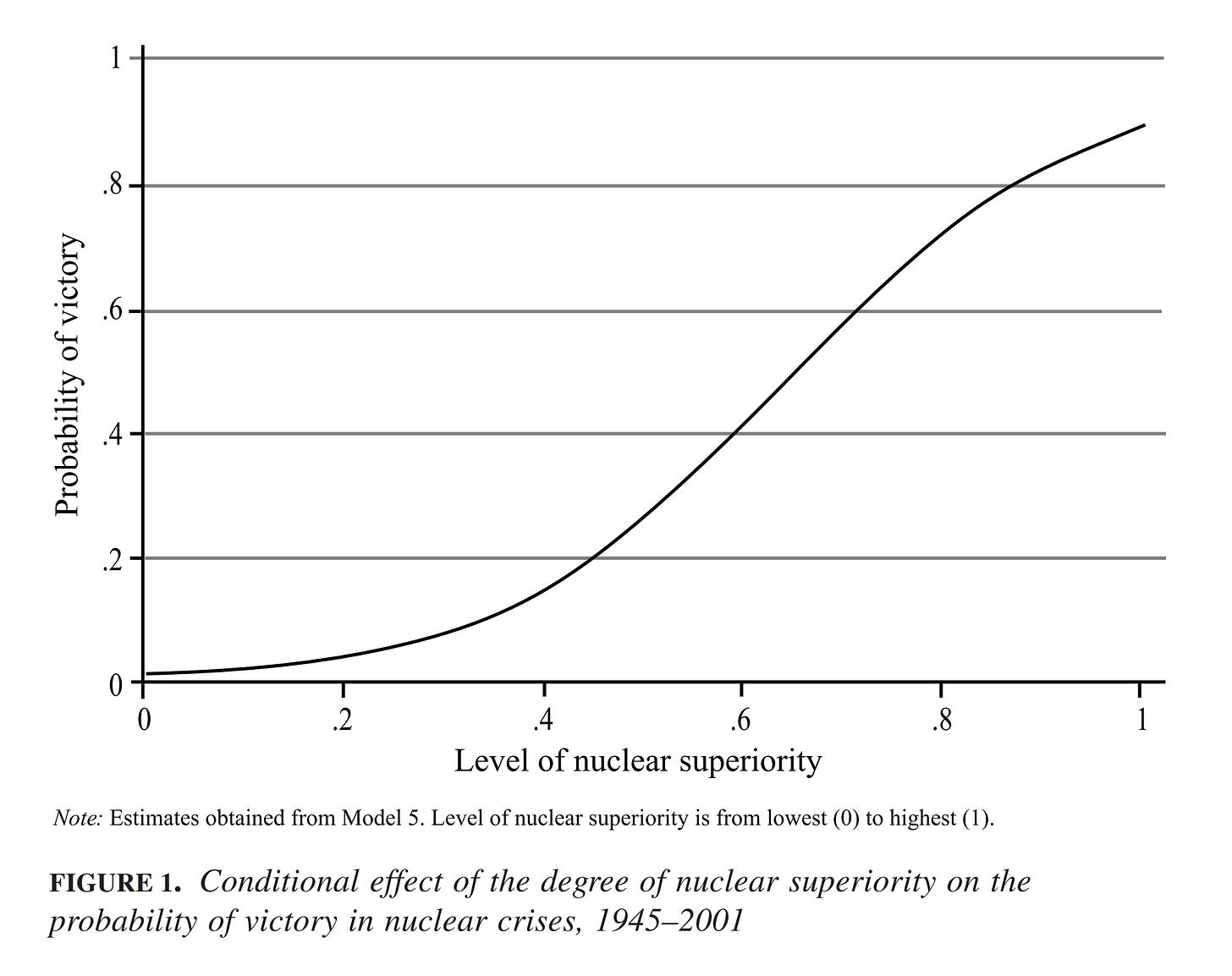

For example, a notable yet controversial study in nuclear strategy argues that nuclear superiority (having more nuclear warheads than one’s adversary) significantly predicts a state’s likelihood of winning a nuclear crisis, even within conflict dyads characterized by mutually assured destruction (MAD). The graphic below, from Matthew Kroenig (2013), illustrates this relationship: as the level of nuclear superiority increases, so does the probability of winning the nuclear crisis.

The confidence we can place in such models largely depends on the relevance of the variables included in the model and the number of observations available. Ideally, we want to include thousands of observations, but with appropriate statistical tools, reliable inferences may sometimes be drawn from far fewer observations, though this would reduce our confidence in the findings.
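As a rough illustration of why the number of observations matters, the sketch below computes an approximate 95 percent Wilson score interval for a simple proportion at three sample sizes. This is a simplification of my own: it covers a single observed share rather than a full logistic regression, and the counts are made up.

```python
import math

def wilson_interval(successes, n, z=1.96):
    """Approximate 95% Wilson score interval for an observed proportion."""
    p_hat = successes / n
    denom = 1 + z**2 / n
    center = (p_hat + z**2 / (2 * n)) / denom
    half = z * math.sqrt(p_hat * (1 - p_hat) / n + z**2 / (4 * n**2)) / denom
    return center - half, center + half

# The same observed share (30 percent) at three made-up sample sizes.
for successes, n in [(3, 10), (30, 100), (3000, 10000)]:
    low, high = wilson_interval(successes, n)
    print(f"n={n:>5}: observed 30%, interval {low:.3f} to {high:.3f}")
```

The observed share is identical in all three cases, but the interval around it narrows sharply as the number of observations grows.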

What does this mean for predicting the likelihood of nuclear use in Ukraine? Theoretically, a logistic regression model could be built to compute the likelihood of nuclear use by incorporating relevant input variables and constructing a dataset.

Unfortunately, this is unfeasible in practice, primarily because the number of observations required to construct a large-n dataset does not exist. Nuclear weapons have been used only twice, in highly specific circumstances in 1945, which falls far short of the data needed to build a dataset that supports statistically significant inferences. Thus, using statistical tools, we cannot confidently predict when nuclear weapons would be used.

Nuclear use is not a random event

The considerations above also highlight why the types of predictions regarding the likelihood of nuclear use often found in popular discourse—and even among decision-makers—typically fall short of valid analysis.

For instance, stating that there is a 50 percent likelihood of nuclear use, as the Biden Administration reportedly assessed during the Kharkiv offensive, implies that, all else being equal, nuclear use would occur in one out of two cases. In other words, assuming these odds, if we could repeat the Kharkiv offensive as an ‘experiment’ under identical conditions, we would statistically expect nuclear weapons to be used in half of the iterations.
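The frequency reading of such a statement can be sketched as a simulation. The code below treats each hypothetical repetition as an independent random draw with a fixed 50 percent chance, which is precisely the assumption a percentage figure smuggles in; the function name and the seed are my own choices for illustration.

```python
import random

def simulate_repeats(probability, repetitions, seed=0):
    """Share of repetitions ending in 'use' if each one were an independent random draw."""
    rng = random.Random(seed)  # fixed seed so the run is reproducible
    uses = sum(1 for _ in range(repetitions) if rng.random() < probability)
    return uses / repetitions

share = simulate_repeats(0.5, 10_000)
print(f"Share of hypothetical repetitions ending in use: {share:.3f}")
```

With many repetitions the share settles close to one half, which is what a 50 percent assessment would have to mean if nuclear use behaved like a random draw.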

However, nuclear use is not a random event. It does not follow the same logic as drawing black and white marbles from a bag with predetermined probabilities.

The value of the dependent variable (nuclear use) depends entirely on the values of the input variables. This means that if we could go back in time and repeat the Kharkiv offensive multiple times under exactly the same conditions (i.e., with no change in any input variable), we would very likely see the same outcome every time.
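A toy sketch of this point, assuming a purely hypothetical decision rule: if the outcome is a fixed function of the inputs, rerunning the same conditions cannot produce a mix of outcomes. The variable name and the threshold below are invented and carry no empirical meaning.

```python
def outcome(inputs):
    """Hypothetical decision rule: the outcome is fully determined by the inputs."""
    # The variable name and the 0.9 threshold are invented for illustration only.
    return 1 if inputs["existential_threat"] > 0.9 else 0

# Replay the same (made-up) conditions 1,000 times.
conditions = {"existential_threat": 0.4}
results = [outcome(conditions) for _ in range(1000)]

# Only one distinct outcome appears, however often the scenario is replayed.
print(set(results))
```

Under this rule the outcome changes only when an input changes, never from one replay to the next.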

Therefore, suggesting that the likelihood of nuclear use is five, 15, or 50 percent may not only exaggerate nuclear risks but also fail to provide a valid inference about the conduct of nuclear strategy.

Deductive inference

In light of the above, can we make any valid inferences about the likelihood of nuclear use? Yes, but it’s crucial to recognize their limitations.

The most important method is what we call ‘deductive inference,’ which does not rely on large-n datasets or comparative case studies (since we lack sufficient data). Instead, it uses logical reasoning to draw specific conclusions from general principles or established facts.

For example, archival evidence from previous intense nuclear crises, such as the Cuban Missile Crisis or the 1983 Able Archer incident, shows that state leaders, who ultimately bear responsibility for nuclear use, are profoundly hesitant to move in that direction for fear of political and military repercussions, even as crises intensify to extreme levels. At the same time, we can logically infer that nuclear use may become more likely if a nuclear-armed state faces increasingly severe existential threats.

This example illustrates how deductive inference, limited in scope as it may be, can allow for an informed analysis of nuclear strategy, or at least provide certain insights. However, it also demonstrates that while decision-makers may seek quantifiable and sweeping risk assessments, we should approach such assessments with skepticism. We simply lack the data. The same caution applies to the public when encountering these analyses, especially when they are instrumentalized to support particular agendas.

Another issue is that we may not fully understand which input variables are relevant. While we can make educated guesses about the factors that might explain nuclear use or non-use, this remains a subject of ongoing academic debate.

Agreed. The most cogent justification for nuclear use I’ve seen involved the Russians suffering a massive battlefield defeat (i.e., another Kharkiv offensive) followed by the desire to “freeze” current battlefield lines.

The possibility of that kind of Ukrainian breakthrough seems extremely unlikely at this point. Yes, the Russians have pretty much used up most of their Soviet stock of APCs, tanks, artillery, etc. but Ukraine doesn’t have massive reserves of people and materiel either.

The main reason not to use nukes is simply that Russia’s trading partners, such as China, are strongly opposed. Never mind how quickly the Russian army, Air Force, etc. would cease to exist if NATO took a more active interest in eliminating them.

The overconfidence with which some use these models is striking indeed. The small-number-of-observations problem is even worse in a way: thinking of WW2, we have one observation of use before any of the actual effects had been seen, one observation of use after some of the effects had been seen, and zero observations with most of the effects known. That seems psychologically relevant. Speaking of which, the type of weapon and target should be psychologically relevant, too. Not to mention that fundamentally different models of human decision-making, such as the rational actor model (RAM) and prospect theory, predict different things.